Introduction to Python Generators

Efficiency is crucial in the realm of Python programming. Whether you’re a seasoned developer or just starting your coding journey, understanding how to write efficient code can make a significant difference in your projects. Enter Python generators—a powerful feature that can help you write more memory-efficient and elegant code. In this blog post, we’ll explore the concept of Python generators, how they differ from lists, and why they are a valuable tool in your programming arsenal.

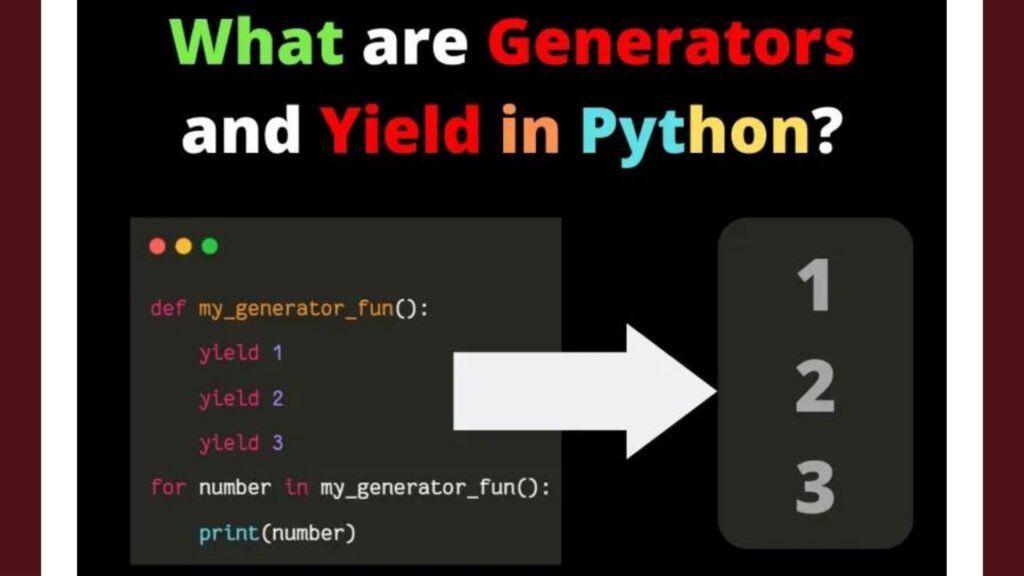

Understanding the ‘yield’ Keyword

At the heart of Python generators lies the `yield` keyword. A regular function returns a single value and terminates. Calling a function that contains `yield` instead returns a generator object. Each time the generator is advanced (by a `for` loop or the built-in `next()`), the function body runs until it reaches the next `yield`, hands back that value, and pauses with its local variables intact. This allows iteration over a sequence of values without storing the entire sequence in memory.

For example, consider a simple function that generates a sequence of numbers:

```python
def simple_generator():
    yield 1
    yield 2
    yield 3
```

When you call `simple_generator()`, it doesn’t execute the function immediately. Instead, it returns a generator object that can be iterated over, yielding one value at a time.
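To see this pause-and-resume behavior step by step, the snippet below advances the generator manually with the built-in `next()`. It repeats the `simple_generator` definition so it runs on its own; the `print` call inside the body is added only to show when the body actually starts executing.

```python
def simple_generator():
    print("body started")  # runs on the first next(), not at call time
    yield 1
    yield 2
    yield 3

gen = simple_generator()   # nothing printed yet: the body has not run
print(next(gen))           # prints "body started", then 1
print(next(gen))           # 2
print(next(gen))           # 3

try:
    next(gen)              # the body has finished, so the generator is exhausted
except StopIteration:
    print("exhausted")

# A for loop (or list()) calls next() for you and stops cleanly on StopIteration.
print(list(simple_generator()))  # prints "body started", then [1, 2, 3]
```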

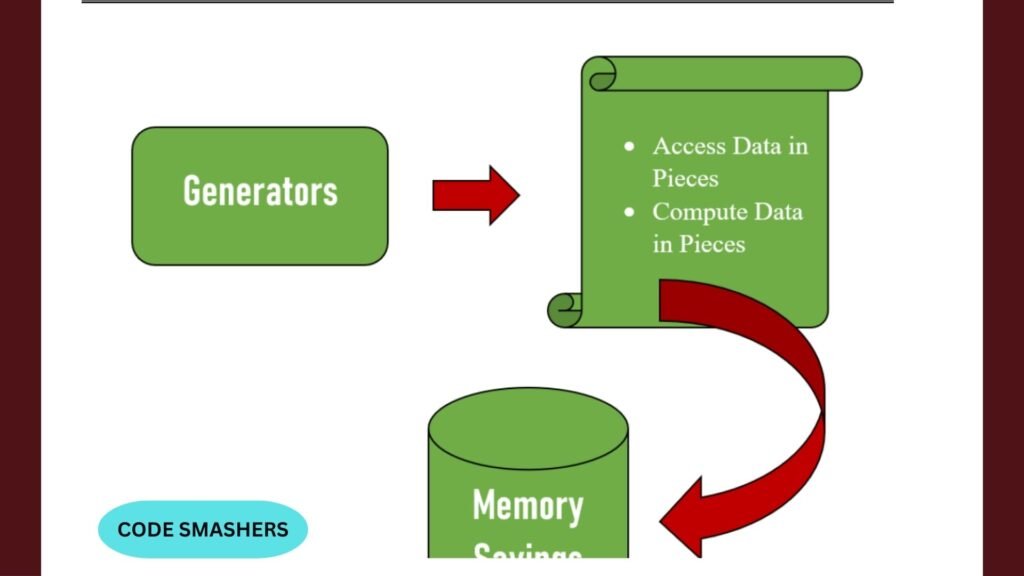

Advantages of Generators over Lists

One of the primary advantages of using generators over lists is memory efficiency. A list stores all of its elements in memory at the same time, which can be a significant overhead when dealing with large datasets. A generator, on the other hand, produces values on the fly and keeps only its current state (the paused function and its local variables), making it much more memory-efficient for large data processing tasks.

Another advantage is that generators are inherently lazy: they compute a value only when it is requested. This saves work when not every value is needed (for example, when a loop stops at the first match), and it lets processing begin before the whole input is available. This is particularly useful in applications such as data streaming and real-time data processing.
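A quick way to observe laziness is to count how much work gets done. In this sketch, `expensive_check` is a hypothetical stand-in for costly per-item work, and a global counter records each call. Asking a generator expression for its first match stops as soon as one is found, whereas a list comprehension checks every item before you can look at the result.

```python
calls = 0

def expensive_check(n):
    """Hypothetical costly test; the global counter records each call."""
    global calls
    calls += 1
    return n % 7 == 0

numbers = range(1, 100_001)  # 100,000 candidate values

# List comprehension: every element is checked before we index the result.
calls = 0
first_from_list = [n for n in numbers if expensive_check(n)][0]
list_calls = calls

# Generator expression: next() pulls values only until the first match.
calls = 0
first_from_gen = next(n for n in numbers if expensive_check(n))
gen_calls = calls

print(first_from_list, list_calls)  # 7 100000
print(first_from_gen, gen_calls)    # 7 7
```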

Use Cases for Python Generators

Data Processing Pipeline

A common use case for Python generators is in data processing pipelines. Imagine working with a large dataset that cannot fit into memory all at once. Generators can process the data in chunks, so memory use is bounded by the chunk size rather than by the size of the whole dataset. In one such case study, a data processing project used generators to handle large datasets efficiently, resulting in reduced memory usage and faster processing times.
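Processing "in chunks" can itself be written as a generator. The helper below is a minimal sketch: the name `chunked` is our own, not a standard-library function (Python 3.12 added a similar `itertools.batched`, which yields tuples). It groups any iterable into lists of at most `size` items, so only one chunk is held in memory at a time.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")

def chunked(iterable: Iterable[T], size: int) -> Iterator[List[T]]:
    """Yield successive lists of up to `size` items from `iterable`."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(iterable)
    while True:
        # islice pulls at most `size` items without touching the rest.
        chunk = list(islice(iterator, size))
        if not chunk:
            return  # source exhausted
        yield chunk

# Usage: sum a large range 10,000 items at a time.
total = 0
for chunk in chunked(range(1_000_000), 10_000):
    total += sum(chunk)
print(total)  # 499999500000
```

Because the source is consumed through a single iterator, the same helper works on files, database cursors, or other generators, not just on ranges.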

Web Scraping Tool

Another practical application of generators is in web scraping tools. When extracting data from multiple web pages, you often deal with large amounts of information. By leveraging generators, you can process each page’s data one at a time, maintaining memory efficiency and improving performance. A notable example involves a web scraping tool that used generators to handle data extraction efficiently, showcasing how they can improve both memory usage and performance.

Recursive Search Algorithm

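Generators can also be beneficial in recursive operations, such as file search algorithms. Deeply recursive code can exceed Python's recursion limit and fail with a `RecursionError` on very deep structures. A generator does not remove that risk by itself (a generator that recurses through `yield from` still nests one generator inside another per level), but it pairs well with an iterative traversal: the generator keeps its own stack of items still to visit and yields each result as soon as it is found, so the caller can start processing, or stop early, without waiting for a complete list. In a project implementing a file search algorithm, Python generators were used to handle recursive searches efficiently, demonstrating their ability to manage complex operations seamlessly.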
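The sketch below shows that explicit-stack approach for a file search. `iter_files` is a hypothetical helper built on the standard `os.scandir`: directories waiting to be visited live in an ordinary list rather than in nested function calls, so tree depth does not grow the Python call stack, and each match is yielded as soon as it is found. Skipping unreadable directories and not following symlinked directories are assumptions about the behavior you want.

```python
import os
from typing import Iterator

def iter_files(top: str, suffix: str) -> Iterator[str]:
    """Yield paths of files under `top` whose names end with `suffix`."""
    stack = [top]  # directories still to visit, instead of nested calls
    while stack:
        current = stack.pop()
        try:
            with os.scandir(current) as entries:
                for entry in entries:
                    if entry.is_dir(follow_symlinks=False):
                        # Defer subdirectories; symlinked directories are
                        # not followed, which avoids cycles.
                        stack.append(entry.path)
                    elif entry.is_file() and entry.name.endswith(suffix):
                        yield entry.path  # hand back each match immediately
        except PermissionError:
            # Assumed policy: skip directories we are not allowed to read.
            continue
```

Calling `iter_files("/path/to/directory", ".txt")` only creates the generator; no directory is opened until the first value is requested.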

Real-Time Data Streaming

In real-time data streaming applications, generators play a crucial role in managing continuous data streams. By generating data on-the-fly, they enable memory-efficient handling of real-time information. An example of this is an application that leveraged generators to manage real-time data streams, highlighting their effectiveness in creating continuous, memory-efficient pipelines.

Performance Comparison: Generators vs. Lists

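When it comes to performance, the difference between generators and lists can be substantial. Lists allocate memory upfront for all their elements, which can be costly for large datasets. Generators, on the other hand, generate values as needed, resulting in lower memory consumption and, for tasks that stop early or never need every value, less total work. The trade-off is that a generator can be iterated only once and adds a small overhead per item, so when you need every value, or need to loop over the data several times, a list is often just as fast or faster.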

Memory Usage

Consider a scenario where you need to generate a large sequence of numbers. Using a list, you need to allocate memory for the entire sequence, which can quickly become impractical. With generators, memory usage is minimized, as values are generated on-the-fly.
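The sketch below makes this concrete with the standard `sys.getsizeof`. Note that `getsizeof` reports only an object's own footprint: for the list, that is its array of one million references, not the integer objects they point to, so the real gap is even larger. Exact byte counts vary by Python version and platform, so none are quoted here.

```python
import sys

n = 1_000_000
squares_list = [i * i for i in range(n)]  # builds all one million values now
squares_gen = (i * i for i in range(n))   # stores only the paused iteration state

print(f"list:      {sys.getsizeof(squares_list):,} bytes")
print(f"generator: {sys.getsizeof(squares_gen):,} bytes")

# Both produce the same values; the generator computes them on demand.
print(sum(squares_gen) == sum(squares_list))  # True
```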

Execution Time

In terms of execution time, generators can outperform lists in scenarios where not all values are required immediately. By yielding values one at a time, generators avoid the overhead of creating and storing large sequences, leading to improved performance.
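The sketch below times one such scenario, finding the first element above a threshold, with the standard-library `timeit` module. Absolute timings depend on your machine and Python version, so none are quoted; the point is that the list version does work proportional to the whole range, while the generator version stops after about a hundred items.

```python
import timeit

data = range(1_000_000)

def first_big_with_list():
    # Builds the full filtered list before taking its first element.
    return [x for x in data if x > 100][0]

def first_big_with_generator():
    # Stops as soon as the first matching value is produced.
    return next(x for x in data if x > 100)

for fn in (first_big_with_list, first_big_with_generator):
    seconds = timeit.timeit(fn, number=3)
    print(f"{fn.__name__}: {seconds:.4f} s for 3 runs")
```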

Best Practices for Writing Efficient Generators

To make the most of Python generators, it’s essential to follow best practices that ensure efficiency and readability. Here are some tips for writing efficient generators:

- Keep It Simple: Aim for simplicity and readability in your generator functions. Avoid overly complex logic that can make the function difficult to understand.

- Use `yield` Wisely: Produce values only as they are needed, and steer clear of pointless `yield` statements, since they may result in inefficiencies.

- Handle Exceptions: Make sure your generator functions handle errors gracefully. Use `try`/`except` blocks to manage expected errors, and `try`/`finally` (or a `with` statement) so that resources are released even if the consumer stops iterating early.
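Both halves of the error-handling tip fit in one small generator. In this sketch, `parse_ints` is a hypothetical example and the `log` list stands in for a real resource such as a file or connection. The inner `try`/`except` skips bad records without ending the stream, and the outer `try`/`finally` runs even when the consumer calls `close()` early, because closing raises `GeneratorExit` at the paused `yield`.

```python
log = []

def parse_ints(records):
    """Yield integers parsed from strings, skipping records that fail to parse."""
    log.append("opened")
    try:
        for record in records:
            try:
                value = int(record)
            except ValueError:
                # Handle a bad record locally so one error does not end the stream.
                log.append(f"skipped {record!r}")
                continue
            yield value
    finally:
        # Runs when the generator is exhausted, raises, or is closed early.
        log.append("closed")

gen = parse_ints(["1", "two", "3", "4"])
print(next(gen))  # 1
print(next(gen))  # 3 (after skipping "two")
gen.close()       # consumer stops early: GeneratorExit triggers the finally block
print(log)        # ['opened', "skipped 'two'", 'closed']
```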

Tips for Efficient Usage of Python Generators

- Use Generators for Large Datasets: When dealing with large datasets that do not fit into memory, prefer generators over lists. This approach allows you to process data incrementally, reducing memory consumption significantly.

- Chain Generators for Complex Pipelines: To build complex data processing pipelines, chain several generators together so that each one handles a single step. This modular approach leads to better-organized, reusable, and scalable code.

- Combine with Lazy Evaluation Techniques: Pair generators with lazy evaluation techniques provided by libraries like `itertools` to optimize performance further. These techniques allow you to perform operations only when necessary, minimizing computational overhead.

- Integrate with Asynchronous Code: For I/O-bound work, use asynchronous generators (`async def` functions that contain `yield`, consumed with `async for`), which can `await` between values. This is particularly effective for applications that involve network requests or file I/O.

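- Maintain State with Simple Data Structures: Keep the state a generator carries between iterations small and simple, such as a few local variables or counters. Note that a single generator object is not safe to advance from several threads at once (CPython raises `ValueError: generator already executing`), so protect a shared generator with a lock or give each thread its own.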

- Profiling and Testing: Regularly profile generator-based implementations to understand performance bottlenecks. Write thorough tests to ensure your generators behave correctly across all expected scenarios.
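The chaining and `itertools` tips above combine naturally. The sketch below wires three small generator stages into a pipeline over some hypothetical log lines (the `"LEVEL message"` format is an assumption for illustration). `itertools.islice` caps the output, and because every stage is lazy, nothing after the second error line is read or parsed.

```python
from itertools import islice
from typing import Iterable, Iterator, Tuple

def read_lines(lines: Iterable[str]) -> Iterator[str]:
    # Stage 1: strip trailing newlines (a real pipeline might read a file here).
    for line in lines:
        yield line.rstrip("\n")

def only_errors(lines: Iterable[str]) -> Iterator[str]:
    # Stage 2: keep lines that start with the assumed "ERROR" prefix.
    return (line for line in lines if line.startswith("ERROR"))

def parse(lines: Iterable[str]) -> Iterator[Tuple[str, str]]:
    # Stage 3: split "LEVEL message" into a (level, message) pair.
    for line in lines:
        level, _, message = line.partition(" ")
        yield level, message

raw = [
    "INFO service started\n",
    "ERROR disk full\n",
    "WARN slow response\n",
    "ERROR timeout\n",
    "ERROR retry failed\n",
]

pipeline = parse(only_errors(read_lines(raw)))
for level, message in islice(pipeline, 2):  # take only the first two errors
    print(level, message)
# ERROR disk full
# ERROR timeout
```

Each stage can be tested on its own with a short list, and stages can be reordered or swapped without touching the others.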

By adhering to these tips, developers can ensure that they use Python generators efficiently, maximizing both performance and code quality in their applications.
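For the asynchronous-code tip, Python's construct is the asynchronous generator: an `async def` function that contains `yield`, consumed with `async for`. In this minimal sketch, `fetch_reading` is a hypothetical coroutine and `asyncio.sleep` stands in for real network I/O; while one reading is being awaited, the event loop is free to run other tasks.

```python
import asyncio
from typing import AsyncIterator, List

async def fetch_reading(sensor_id: int) -> int:
    # Hypothetical I/O call; asyncio.sleep simulates waiting on the network.
    await asyncio.sleep(0.01)
    return sensor_id * 10

async def readings(sensor_ids: List[int]) -> AsyncIterator[int]:
    # An async generator: it can await between yields.
    for sensor_id in sensor_ids:
        yield await fetch_reading(sensor_id)

async def main() -> List[int]:
    results = []
    async for value in readings([1, 2, 3]):
        results.append(value)
    return results

print(asyncio.run(main()))  # [10, 20, 30]
```

This version fetches readings one after another; the gain is that other tasks can run during each wait, not that the readings arrive in parallel.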

Real-World Examples of Python Generators

Example 1: Data Processing Pipeline

Imagine you need to process a large log file that cannot fit into memory all at once. With a generator, you can read the file line by line, processing each line as needed, without loading the entire file into memory. Here’s a simple example:

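```python
def read_large_file(file_path):
    # The file object is itself a lazy line iterator, so only the
    # current line is held in memory.
    with open(file_path, "r", encoding="utf-8") as file:
        for line in file:
            yield line

def process_line(line):
    # Placeholder: replace with your own per-line logic.
    print(line.rstrip("\n"))

for line in read_large_file("large_log_file.txt"):
    process_line(line)
```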

Example 2: Web Scraping Tool

Consider a web scraping tool that extracts data from multiple web pages. By using a generator, you can handle the data extraction one page at a time, maintaining memory efficiency:

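```python
import requests

def fetch_pages(urls):
    # Fetch one page per iteration; only the current page is held here.
    for url in urls:
        response = requests.get(url, timeout=10)  # avoid waiting forever
        yield response.content

def process_content(content):
    # Placeholder: replace with your own parsing logic.
    print(len(content), "bytes")

urls = ["http://example.com/page1", "http://example.com/page2"]

for page_content in fetch_pages(urls):
    process_content(page_content)
```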

Example 3: Recursive Search Algorithm

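In a recursive file search, a generator lets you hand back each match as soon as it is found. Here, `os.walk` handles the directory traversal and keeps track of its position between iterations, so callers can start processing results, or stop early, without waiting for the whole tree to be scanned: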

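```python
import os

def find_files(directory, file_extension):
    # os.walk traverses the tree; each match is yielded as soon as it is found.
    for root, _, files in os.walk(directory):
        for file in files:
            if file.endswith(file_extension):
                yield os.path.join(root, file)

def process_file(file_path):
    # Placeholder: replace with your own per-file logic.
    print(file_path)

for file_path in find_files("/path/to/directory", ".txt"):
    process_file(file_path)
```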

Example 4: Real-Time Data Streaming

In real-time data streaming, generators can be used to handle continuous data streams efficiently:

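```python
import random
import time

def generate_data_stream():
    # An infinite stream: one simulated reading per second.
    while True:
        data = random.randint(1, 100)
        yield data
        time.sleep(1)

def process_data(data):
    # Placeholder: replace with your own handling logic.
    print(data)

# Runs until interrupted (for example, with Ctrl+C).
for data in generate_data_stream():
    process_data(data)
```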
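An infinite stream like this is usually consumed a piece at a time. The sketch below uses a `rolling_average` helper of our own (not part of the original example) that keeps only the last `window` readings in a `collections.deque` with `maxlen`, so its memory use stays fixed no matter how long the stream runs. `itertools.count()` stands in for an endless source, and `itertools.islice` stops after a finite number of results.

```python
from collections import deque
from itertools import count, islice
from typing import Iterable, Iterator

def rolling_average(stream: Iterable[float], window: int) -> Iterator[float]:
    """Yield the mean of the most recent `window` values after each new value."""
    recent = deque(maxlen=window)  # the oldest value is dropped automatically
    for value in stream:
        recent.append(value)
        yield sum(recent) / len(recent)

readings = [10, 20, 30, 40, 50]
print(list(rolling_average(readings, window=3)))
# [10.0, 15.0, 20.0, 30.0, 40.0]

# Works on an endless source too, as long as the consumer stops it.
print(list(islice(rolling_average(count(), window=2), 4)))
# [0.0, 0.5, 1.5, 2.5]
```

With the stream from Example 4, `islice(rolling_average(generate_data_stream(), window=5), 10)` would yield ten averages and then stop.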

Future Outlook for Python Generators

The future of Python generators looks promising, as developers continue to explore their potential in efficient data processing and resource management. As datasets grow and more applications require real-time processing, generators will likely play a critical role due to their ability to handle large volumes of data with minimal memory overhead. Python has supported asynchronous generators since version 3.6 (PEP 525), and as its asynchronous programming capabilities continue to advance, generators are likely to integrate even more smoothly with asynchronous constructs, providing further benefits for tasks involving I/O and concurrency. As the Python community continues to innovate, we can anticipate more sophisticated tools and libraries that leverage generators to solve complex problems, improving developer productivity and application efficiency in many fields.

Conclusion

Python generators are a powerful tool that can significantly enhance the efficiency and performance of your code. By understanding how to use the `yield` keyword and following best practices, you can leverage generators to handle large datasets, manage memory efficiently, and improve performance.

Ready to take your Python skills to the next level? Start experimenting with generators in your projects today and experience the benefits firsthand. Happy coding!